Autonomys Network × Tradable

Autonomys is pleased to share that Tradable has integrated Auto Drive, gaining direct access to Autonomys’ Distributed Storage Network (DSN) for permanent, verifiable, on-chain data storage.

This integration enables Tradable’s privacy-first AI agent, SenseAI, to persist encrypted AI memory on-chain without relying on centralized databases or off-chain trust assumptions.

What the Integration Is

Tradable is using Auto Drive as the storage layer for SenseAI’s long-term memory. Auto Drive is Autonomys’ S3-compatible gateway to its Proof-of-Archival-Storage (PoAS)-powered DSN, where stored data inherits the permanence and verifiability guarantees of the Autonomys Network itself.

In practice, this means AI-generated data produced by SenseAI is encrypted and written directly to Autonomys’ decentralized storage layer, where it remains tamper-resistant and independently verifiable over time.

How It Works (Technical Overview)

The following architecture and workflow are implemented by Tradable and shared here for clarity and accuracy.

TEE-to-Storage Pipeline

- SenseAI’s Oracle runs inside an Oasis ROFL Trusted Execution Environment (TEE).

- Once an AI response is generated inside the enclave, the data is encrypted using AES-GCM, with encryption keys derived from the user’s wallet signature.

- The encrypted payload is uploaded directly to Auto Drive, anchoring it to Autonomys’ DSN.
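The source does not say how a wallet signature becomes an AES-GCM key. One common pattern, assumed here, is to sign a fixed message and pass the signature through HKDF-SHA256 (RFC 5869) to get a 256-bit key. The sketch below implements HKDF with Python's standard `hmac` module. The function name `derive_memory_key`, the salt, and the `info` label are hypothetical illustrations, not Tradable's code.

```python
import hashlib
import hmac

HASH = hashlib.sha256
HASH_LEN = HASH().digest_size  # 32 bytes for SHA-256


def hkdf_extract(salt: bytes, ikm: bytes) -> bytes:
    """RFC 5869 extract step: concentrate input keying material into a PRK."""
    if not salt:
        salt = bytes(HASH_LEN)  # RFC 5869: an absent salt is HashLen zero bytes
    return hmac.new(salt, ikm, HASH).digest()


def hkdf_expand(prk: bytes, info: bytes, length: int) -> bytes:
    """RFC 5869 expand step: stretch the PRK into `length` output bytes."""
    if length > 255 * HASH_LEN:
        raise ValueError("HKDF-SHA256 can output at most 8160 bytes")
    okm = b""
    block = b""
    counter = 1
    while len(okm) < length:
        # T(i) = HMAC(PRK, T(i-1) || info || i)
        block = hmac.new(prk, block + info + bytes([counter]), HASH).digest()
        okm += block
        counter += 1
    return okm[:length]


def derive_memory_key(wallet_signature: bytes, salt: bytes,
                      info: bytes = b"example/ai-memory/v1") -> bytes:
    """Hypothetical helper: derive a 256-bit AES-GCM key from a wallet signature."""
    return hkdf_expand(hkdf_extract(salt, wallet_signature), info, 32)


if __name__ == "__main__":
    # Stand-in bytes; a real flow would use the wallet's signature over a fixed message.
    signature = bytes.fromhex("11" * 65)
    key = derive_memory_key(signature, salt=b"user-0x1234")
    print(len(key), key.hex())
```

The 32-byte key would then go to an AES-256-GCM implementation together with a fresh 12-byte nonce for every payload. The `AESGCM` class in the third-party `cryptography` package is one example. This pattern also assumes the wallet signs deterministically, so the same message always produces the same signature. Otherwise the key cannot be re-derived on another device.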

Structured, Encrypted Data Storage on Auto Drive

Tradable stores three distinct types of encrypted data via Auto Drive:

- Conversation and Metadata: Immutable IDs and mutable metadata (like conversation titles) are stored as JSON files.

- Message History: Every user prompt and AI response is stored as an individual, immutable file referenced by a CID.

- Search Indices: Tradable stores encrypted search deltas on Auto Drive, allowing the client to build a local, private search index of the user's history.
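The sketch below shows one way the three data types could fit together. It is minimal and unencrypted. It assumes a toy in-memory store whose "CID" is a plain SHA-256 hex digest; real IPFS/IPLD CIDs also carry multihash and codec prefixes. All class and function names are hypothetical and are not Auto Drive or Auto SDK APIs. In a real deployment, each payload would be encrypted before upload.

```python
import hashlib
import json
from collections import defaultdict


class ToyContentStore:
    """Hypothetical in-memory stand-in for a content-addressed store."""

    def __init__(self) -> None:
        self._blobs: dict[str, bytes] = {}

    def put(self, data: bytes) -> str:
        # Simplified content ID: the SHA-256 hex digest of the bytes.
        cid = hashlib.sha256(data).hexdigest()
        self._blobs[cid] = data
        return cid

    def get(self, cid: str) -> bytes:
        data = self._blobs[cid]
        # Content addressing makes every read self-verifying.
        if hashlib.sha256(data).hexdigest() != cid:
            raise ValueError("content does not match its CID")
        return data


def to_json_bytes(obj) -> bytes:
    # Canonical JSON (sorted keys) so identical objects yield identical CIDs.
    return json.dumps(obj, sort_keys=True).encode()


def store_message(store, role: str, text: str) -> str:
    """Each prompt or response becomes its own immutable file."""
    return store.put(to_json_bytes({"role": role, "text": text}))


def store_metadata(store, conversation_id: str, title: str, message_cids: list) -> str:
    """'Mutable' metadata: an edit writes a new JSON version; the conversation ID stays fixed."""
    return store.put(to_json_bytes(
        {"conversation_id": conversation_id, "title": title, "messages": message_cids}))


def search_delta(message_cid: str, text: str) -> dict:
    """One small index delta per message: term -> [message CID]."""
    return {term: [message_cid] for term in sorted(set(text.lower().split()))}


def build_local_index(deltas) -> dict:
    """Client side: merge downloaded deltas into a private inverted index."""
    index = defaultdict(set)
    for delta in deltas:
        for term, cids in delta.items():
            index[term].update(cids)
    return index


if __name__ == "__main__":
    store = ToyContentStore()
    m1 = store_message(store, "user", "What is PoAS")
    m2 = store_message(store, "assistant", "PoAS secures consensus with stored history")
    meta = store_metadata(store, "conv-1", "PoAS basics", [m1, m2])
    index = build_local_index([search_delta(m1, "What is PoAS"),
                               search_delta(m2, "PoAS secures consensus with stored history")])
    print(json.loads(store.get(meta))["title"], sorted(index["poas"]) == sorted([m1, m2]))
```

Renaming a conversation writes a new metadata version with a new CID while the conversation ID stays stable. Message files are never rewritten, so a CID reference to a message always resolves to the same bytes.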

Why Tradable Chose Auto Drive

Below are the reasons Tradable shared for integrating Auto Drive.

- User Sovereignty

SenseAI is designed as a privacy-first agent. User history is not stored in centralized databases. Auto Drive enables users to retain true ownership of their AI memory while benefiting from on-chain permanence.

- Performance

Upload speeds from Tradable’s Oracle to Autonomys, along with retrieval via the Auto Drive Gateway, have exceeded the performance requirements needed for a real-time chat experience.

- Encryption Compatibility

Autonomys’ storage layer reliably handles encrypted payloads without corruption, supporting Tradable’s end-to-end privacy model.

Why This Matters

This integration demonstrates a working architecture for verifiable, privacy-preserving AI systems.

AI reasoning and memory can be generated inside secure execution environments, encrypted by the user’s cryptographic identity, and stored on-chain in a way that is permanent, tamper-resistant, and independently verifiable.

Autonomys is proud to support the Tradable team and their work on SenseAI. Their focus on user sovereignty, privacy, and technical rigor closely aligns with how we think about long-term, trustworthy AI infrastructure. For Autonomys, this integration reflects a growing class of real workloads using Auto Drive not just as decentralized storage, but as long-term memory infrastructure for AI agents, where data integrity, provenance, and user ownership are non-negotiable.

About Tradable

Tradable, currently in public beta and preparing for a Q1 2026 launch, is pioneering a retail-focused, AI-powered automated trading platform that combines community-built trading bots with high-frequency trading capabilities — a unique offering in the crypto space.

The Problem: Retail traders face growing disadvantages, with only 1 in 20 now achieving consistent profits as institutions refine their ability to extract value from retail traders through superior tools, strategies, and dedicated teams. Barriers such as emotional trading, missed opportunities, and reliance on basic algorithmic tools like DCA and grid bots leave retail traders at a significant disadvantage, reinforcing a system where institutions dominate.

Tradable’s Mission: To democratize algorithmic trading and reverse this trend, empowering retail investors to compete effectively and regain the potential for consistent profitability.

January 2026 | End-of-Month Report

January focused on stabilizing network operations, completing outstanding end-of-year initiatives, and establishing a defined growth cadence for 2026. Highlights this month included completing the ambassador benefits rollout, advancing numerous grant applications through defined review stages, and supporting Auto Drive adoption with both current and new partners. This report outlines material progress over the past month and the momentum heading into February.

Operations

January centered on closing commitments made in late 2025 and establishing a stable operational baseline for the year ahead. Cost-containment initiatives were completed, bringing operating expenses to a sustainable level and enabling continued focus on protocol development and Auto Drive adoption.

Investor and compliance support remained a priority. Year-end 2025 audit documentation was completed, and stakeholder materials were prepared to support financial reporting requirements. In parallel, coordination continued around infrastructure and process planning for the July 2026 stakeholder token claims distribution.

Ecosystem strategy remains intentionally selective, prioritizing integrations where permanent, verifiable data is foundational to the product rather than exploratory. The operating approach emphasizes reliability, execution quality, and long-term adoption.

Engineering & Protocol Updates

Network & Runtime

- Chronos runtime upgrades and mandatory client release

Chronos runtime upgrades were completed and a mandatory client release was deployed, improving runtime stability and ensuring predictable upgrade paths for node operators and builders across testnet and mainnet environments.

- Core network stack upgrades

Substrate and libp2p were upgraded to improve performance, compatibility, and alignment with current ecosystem standards, strengthening security and interoperability.

- Domain liveness reporting

An issue affecting offline detection for Domain operators was resolved. Domains now report execution-layer liveness accurately, improving operational visibility and reliability. This update helps pave the way for permissionless operator instantiation.

- WebAssembly compilation target update

The WebAssembly compilation target was updated to the latest standard, supporting ongoing runtime compatibility and future execution-layer development.

Auto Drive

- Egress monitoring and archiving status fixes

Improvements to egress monitoring and archiving status handling were deployed, enhancing observability and correctness for users relying on Auto Drive for persistent storage.

Developer & Community Tooling

- Space Acres

A new version of Space Acres, Autonomys’ farming application, is now available. Download Space Acres to begin farming on the network.

Together, these updates strengthen network reliability, improve operational transparency, and support continued Domain development and Auto Drive usage.

Subspace Foundation

Ambassador Program

The Subspace Foundation completed the end-to-end rollout of ambassador benefits. This includes KYC via Persona, executed agreements through DocuSign, and a dedicated beneficiary application with supporting documentation and portal access.

The Subspace Foundation launched vesting and unlocks through Hedgey, with Auto EVM support added and ambassador vesting contracts deployed. Eligible ambassadors can independently claim and manage their allocations through the beneficiary portal.

Claim guide and FAQ: https://beneficiary.subspace.foundation/claim

Boosted Incentivized Staking — Guardians of Growth

The Subspace Foundation’s Guardians of Growth boosted incentivized staking initiative is ongoing and continues to support broad participation and network security. The program includes a 5,000,000 AI3 allocation distributed over approximately twelve months from its original launch date. Guardians of Growth has proven popular with the community, with more than 37 million AI3 committed to operators, representing almost a third of unlocked supply. Participation remains open to all token holders.

Grants Program

The Subspace Foundation’s Grants Program continues to gain traction, with a steady flow of new applications entering the pipeline each week. During January, applications progressed through defined evaluation stages:

- Initial review: 9 projects (5 new in January)

- Discovery: 9 projects

- KYC & agreement: 1 project

- Not advanced this round: 19 projects

The “not advanced this round” category maintains clarity on current decisions while preserving optionality for future consideration.

Builders working on permanent storage, agent infrastructure, or data availability are encouraged to apply.

Ecosystem & Developer Momentum

Auto Drive, Autonomys’ gateway to permanent on-chain storage on the DSN, continues to support production and near-production use cases across the ecosystem.

Partners already live or actively deepening their Auto Drive integration include:

- Gaia — Storage of full conversation histories and agent memory for verifiable, on-chain persistence alongside decentralized inference.

- Secret Network — Runs Auto Secret Agent, a proof-of-concept AI agent that archives agent reasoning and I/O to the DSN while running confidential compute inside a TEE.

- Tradable — SenseAI persists encrypted AI memory, including search indices, message history, and metadata, on the DSN instead of in centralized databases.

- MetaProof — Permanent storage for long-form media, codex artifacts, and narrative layers using content-addressed on-chain data.

- Baselight — Has onboarded Autonomys IPLD node datasets (the same content-addressed DSN data that powers Auto Drive) and built an Autonomys Insights dashboard so developers can query and visualize storage and chain activity in a structured way.

- Heurist — Integrated Auto Drive for permanent storage of research artifacts and AI-generated outputs.

- SpoonOS — Integrated Auto Drive into its AI framework to provide decentralized, permanent storage services to its ecosystem developers.

Several partners are exploring deeper integration pathways, while additional projects are currently onboarding. Further ecosystem additions are expected to be announced throughout February. In parallel, a new builders program developed in collaboration with a major Web3 weather data network is scheduled to launch early in February.

Metrics — January Snapshot

- Staking: 37,843,634 AI3 staked as of January 30th, 2026

- Auto Drive usage: 128,810 files uploaded

- Partnerships: 61 total

- Announced Auto Drive integrations: 7

- Grants: 38 total applications

Ambassador Benefits (Subspace Foundation)

- Total vested (wAI3): 2,697,860.51568

- Total claimed (wAI3): 1,849,503.39653

- Active vesting plans: 30

- Total recipients: 88
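The January figures can be cross-checked with a few lines of exact decimal arithmetic. The four grant stages listed earlier should sum to the 38 total applications. The ambassador vesting totals imply the unclaimed balance and claim rate computed below; these are derived values, not separately reported figures.

```python
from decimal import Decimal, ROUND_HALF_UP

# Figures copied from the January 2026 report.
grant_stages = {
    "initial_review": 9,
    "discovery": 9,
    "kyc_and_agreement": 1,
    "not_advanced": 19,
}
total_vested = Decimal("2697860.51568")   # wAI3
total_claimed = Decimal("1849503.39653")  # wAI3

total_applications = sum(grant_stages.values())
unclaimed = total_vested - total_claimed  # vested but not yet claimed
claim_rate_pct = (total_claimed / total_vested * 100).quantize(
    Decimal("0.1"), rounding=ROUND_HALF_UP)

print(total_applications)  # 38
print(unclaimed)           # 848357.11915
print(claim_rate_pct)      # 68.6
```

`Decimal` is used instead of floats so that the five-decimal wAI3 amounts subtract exactly.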

Looking Ahead

In February, the focus is on continuing to ramp Auto Drive usage, announcing the first wave of grant recipients, increasing farmer participation as storage demand grows, supporting ambassadors through the claiming process, and launching a new builders program. We’re heading into a busy and exciting February. Stay tuned for additional updates and announcements!

Why 2026 Will Reward Data Infrastructure, Not Just AI Applications

How BlackRock’s 2026 Thematic Outlook Reinforces Autonomys’ Architectural Thesis

BlackRock’s 2026 Thematic Outlook marks a clear shift in how the next phase of technology adoption is being framed at the institutional level. Rather than focusing on consumer-facing AI applications or short-cycle innovation narratives, the report repeatedly returns to infrastructure: the physical, digital, and economic systems required to sustain AI at scale.

The message to investors and builders is explicit: infrastructure is no longer the back office — it’s the bottleneck. In 2026, the limiting factor for AI and tokenized systems is no longer model capability or capital; it’s whether the underlying data infrastructure can persist, scale, and remain trustworthy over time.

Autonomys Network was architected for precisely this inflection point. It is a storage-native Layer 1 built on the Subspace Protocol and designed around permanent, cryptographically verifiable, globally scalable decentralized storage. Its architects identified early that data infrastructure is the hardest problem to solve, and optimized for it first.

Infrastructure Is the Binding Constraint of the AI Era

BlackRock is explicit that infrastructure, not applications, is now the connective tissue between ambition and reality:

“Driven by AI compute, national security, energy demand, and supply-chain resilience, infrastructure is the connective tissue linking economic ambition with real-world capacity.”

— BlackRock 2026 Thematic Outlook, p.12

This framing elevates data infrastructure to the same strategic plane as energy grids and logistics networks. In that context, decentralized systems are no longer evaluated on novelty alone, but on whether they can deliver durable capacity under real-world constraints.

Autonomys sits directly in this category. Its core innovation, Proof-of-Archival-Storage (PoAS), anchors consensus security to stored historical data rather than compute power or staked capital, making storage itself the scarce and valuable resource securing the network. Today that network is secured by a globally distributed base of farmers contributing over 50 PB of pledged storage — proof that infrastructure-first design is already live at scale.
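A toy lottery can make concrete the idea that storage, rather than compute or stake, decides who secures the network. This is not the PoAS algorithm, which involves farmer plots, challenge audits, and Proof-of-Time; see the Autonomys documentation for the real protocol. It only illustrates the core intuition: a farmer's chance of holding a winning ticket grows with the amount of data it actually stores. All names are hypothetical.

```python
import hashlib


def ticket(piece: bytes, challenge: bytes) -> int:
    """A farmer can only compute a ticket for a piece it actually stores."""
    digest = hashlib.sha256(challenge + piece).digest()
    return int.from_bytes(digest[:8], "big")


def best_ticket(pieces: list, challenge: bytes) -> int:
    # More stored pieces means more draws, hence a lower expected minimum.
    return min(ticket(p, challenge) for p in pieces)


def winner(farmers: dict, challenge: bytes) -> str:
    """The farmer holding the lowest ticket for this challenge wins the slot."""
    return min(farmers, key=lambda name: best_ticket(farmers[name], challenge))


def simulate(farmers: dict, rounds: int) -> dict:
    wins = {name: 0 for name in farmers}
    for slot in range(rounds):
        # Stand-in for unpredictable per-slot randomness.
        challenge = hashlib.sha256(b"slot-%d" % slot).digest()
        wins[winner(farmers, challenge)] += 1
    return wins


if __name__ == "__main__":
    farmers = {
        "large": [b"large-piece-%d" % i for i in range(100)],
        "small": [b"small-piece-%d" % i for i in range(10)],
    }
    # Roughly 100/110 of slots should go to "large" and 10/110 to "small".
    print(simulate(farmers, 2000))
```

In this toy model, win frequency scales with stored pieces, and a farmer gains nothing by computing faster on data it does not hold.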

AI Is Becoming Token-Intensive Because It Is Becoming Agentic

One of the most important technical observations in BlackRock’s outlook concerns the nature of AI workloads:

“Think of tokens as AI’s fuel: more computing power or tasks requires more tokens.”

— BlackRock 2026 Thematic Outlook, p.7

“Token intensity rises sharply as AI moves beyond chat and into reasoning.”

— BlackRock 2026 Thematic Outlook, p.7

“More complex tasks (not just more users) are driving the next leg of compute demand.”

— BlackRock 2026 Thematic Outlook, p.7

Reasoning systems and autonomous agents generate persistent data by design: memory states, decision logs, provenance trails, and evolving context that must remain accessible over time.

Autonomys’ Distributed Storage Network (DSN) is built for exactly these conditions. The network combines scalable bandwidth, erasure-coded replication, and native on-chain indexing to support high-throughput workloads where data must remain tamper-resistant and independently verifiable. The Autonomys Agents Framework and Auto Drive are built so that agentic systems can use the DSN for persistent memory and context — agentic workloads are not an edge case in this architecture; they are a primary design target.
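Erasure coding lets a network rebuild lost data from the pieces that remain, instead of keeping full copies everywhere. The sketch below uses the simplest possible code: a single XOR parity shard that survives the loss of any one shard. It is an illustrative stand-in only. The DSN's production scheme is more capable and is described in the Autonomys documentation. Function names are hypothetical.

```python
def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def encode(data: bytes, k: int) -> list:
    """Split data into k equal data shards plus one XOR parity shard."""
    if k < 1:
        raise ValueError("k must be at least 1")
    size = max(1, -(-len(data) // k))  # ceiling division, at least one byte
    padded = data.ljust(size * k, b"\0")
    shards = [padded[i * size:(i + 1) * size] for i in range(k)]
    parity = shards[0]
    for shard in shards[1:]:
        parity = xor_bytes(parity, shard)
    return shards + [parity]


def recover(shards: list, missing: int) -> bytes:
    """Rebuild one lost shard: the XOR of all remaining shards equals it."""
    survivors = [s for i, s in enumerate(shards) if i != missing and s is not None]
    rebuilt = survivors[0]
    for shard in survivors[1:]:
        rebuilt = xor_bytes(rebuilt, shard)
    return rebuilt


def decode(shards: list, k: int, original_length: int) -> bytes:
    """Reassemble the data shards and strip the zero padding."""
    return b"".join(shards[:k])[:original_length]
```

Codes such as Reed-Solomon generalize this idea to tolerate several simultaneous losses for a chosen amount of redundancy.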

Physical Constraints Elevate Distributed Architectures

BlackRock repeatedly emphasizes that AI infrastructure is now constrained by physical realities, particularly reliable energy availability:

“For AI infrastructure, power availability & reliability remains a key constraint.”

— BlackRock 2026 Thematic Outlook, p.11

“Power availability remains a key constraint in AI infrastructure.”

— BlackRock 2026 Thematic Outlook, p.13

These constraints challenge assumptions embedded in purely centralized infrastructure models. Autonomys does not replace centralized compute; it decouples long-term data availability from single-region or single-provider capacity.

The DSN is supported by a globally distributed network of farmers (miners) contributing disk space on commodity SSDs. Unlike systems where data lives on external cloud infrastructure or auxiliary storage layers, data stored on Autonomys is written directly to the consensus chain itself. That same data is held on farmers’ drives and actively used by PoAS to secure the network. The design is novel not because storage is added alongside consensus, but because storage and consensus are the same mechanism. By anchoring both security and availability to real, widely distributed storage rather than specialized hardware, centralized providers, or geographic concentration, Autonomys treats resilience and distribution as foundational properties, not optional optimizations.

Tokenization Is Maturing Into Record Infrastructure

BlackRock’s treatment of tokenization is operational rather than speculative:

“As the thematic landscape changes — so may the ways we invest in it, including both private, public, and tokenized exposures.”

— BlackRock 2026 Thematic Outlook, p.2

“The rise of stablecoins may open opportunities to go beyond cash into tokenized assets like private credit.”

— BlackRock 2026 Thematic Outlook, p.16

“Tokenized assets: reflect ownership rights in token format that can be traded, settled, and recorded on a blockchain.”

— BlackRock 2026 Thematic Outlook, p.18

The word “recorded” is critical. Tokenized systems require durable metadata, provenance records, and historical proofs that remain independently verifiable long after execution or settlement.

This shift is no longer theoretical. In January 2026, the New York Stock Exchange announced it is developing a platform for tokenized securities, stating that it will support “trading and on-chain settlement of tokenized securities”, subject to regulatory approval. The announcement underscores that tokenization at institutional scale depends not just on execution and settlement, but on durable, trustworthy records that persist over time.

Settlement layers need a data layer: tokenized assets require durable, verifiable records of what’s been tokenized. The DSN, accessed via Auto Drive, is built to be that record layer.

Autonomys does not position itself as a financial settlement layer, but its infrastructure is directly relevant wherever tokenized systems require durable, trust-minimized records rather than ephemeral or centralized storage.
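A Merkle tree is one generic way a record layer can make individual entries independently verifiable. A batch of records is committed with a single root hash, and anyone holding that root can later check one record using a proof whose size grows only logarithmically with the batch. The sketch below is a textbook SHA-256 Merkle tree with leaf/node domain separation. It is not Auto Drive's internal data format, and the record strings are invented examples.

```python
import hashlib


def _leaf_hash(record: bytes) -> bytes:
    return hashlib.sha256(b"\x00" + record).digest()  # 0x00 prefix marks leaves


def _node_hash(left: bytes, right: bytes) -> bytes:
    return hashlib.sha256(b"\x01" + left + right).digest()  # 0x01 marks inner nodes


def build_levels(records: list) -> list:
    """Return every tree level, from the leaf hashes up to [root]."""
    if not records:
        raise ValueError("need at least one record")
    level = [_leaf_hash(r) for r in records]
    levels = [level]
    while len(level) > 1:
        if len(level) % 2 == 1:
            level = level + [level[-1]]  # duplicate the last node on odd levels
        level = [_node_hash(level[i], level[i + 1]) for i in range(0, len(level), 2)]
        levels.append(level)
    return levels


def prove(levels: list, index: int) -> list:
    """Collect (sibling_hash, sibling_is_right) pairs from leaf to root."""
    proof = []
    for level in levels[:-1]:
        if len(level) % 2 == 1:
            level = level + [level[-1]]
        proof.append((level[index ^ 1], index % 2 == 0))
        index //= 2
    return proof


def verify(root: bytes, record: bytes, proof: list) -> bool:
    """Recompute the path from one record to the root and compare."""
    acc = _leaf_hash(record)
    for sibling, sibling_is_right in proof:
        acc = _node_hash(acc, sibling) if sibling_is_right else _node_hash(sibling, acc)
    return acc == root
```

Duplicating the last node on odd levels follows Bitcoin's convention. That convention has a known ambiguity: `[a, b, c]` and `[a, b, c, c]` produce the same root. Production designs therefore often commit the leaf count or pad differently.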

Auto Drive as the Adoption Surface for Infrastructure-First Systems

Infrastructure only becomes strategic when it is usable.

Auto Drive is Autonomys’ gateway to the DSN, exposing permanent on-chain storage through an interface designed to mirror familiar cloud patterns. It provides an S3-compatible API, content-addressed storage, optional end-to-end encryption, and direct integration with Autonomys’ PoAS-secured blockspace. For builders, that means permanent storage with semantics they already know, without needing to learn a new consensus layer, and with cryptographic integrity guaranteed by the network.

This design choice matters in a world where institutions and developers increasingly demand infrastructure guarantees without operational friction. Auto Drive and the Auto SDK allow builders to write data once and rely on the network itself, rather than off-chain pinning or centralized trust, to ensure long-term availability and verifiability.

2026 Rewards Architectures Built for Time

BlackRock’s 2026 outlook does not suggest a slowdown in AI innovation. It suggests a re-pricing of what actually matters.

- AI systems are becoming persistent rather than ephemeral.

- Tokenization is becoming record-oriented rather than speculative.

- Infrastructure constraints are becoming binding rather than theoretical.

Autonomys is built for that shift. It does not compete on short-cycle application features; it competes on whether data can last, remain accessible, and be independently verified over time.

In 2026, that distinction moves from niche to necessary. For the builders and institutions already planning for it, the infrastructure is ready.

Begin storing in minutes. Upload once. Store forever.

Access Autonomys’ permanent on-chain storage today:

Developer Hub: https://develop.autonomys.xyz

Auto Drive: https://ai3.storage

References & Further Reading

BlackRock

BlackRock 2026 Thematic Outlook — Infrastructure as binding constraint; AI compute and token intensity; power and energy constraints; institutional framing of tokenization (pp. 2, 7, 11–13, 16, 18).

https://www.ishares.com/us/literature/presentation/2026-thematic-outlook-stamped.pdf

New York Stock Exchange (NYSE)

The New York Stock Exchange Develops Tokenized Securities Platform — Announcement of a platform for trading and on-chain settlement of tokenized securities; institutional validation of tokenization as market infrastructure and durable record-keeping layer (January 19, 2026).

https://ir.theice.com/press/news-details/2026/The-New-York-Stock-Exchange-Develops-Tokenized-Securities-Platform/default.aspx

Autonomys Network

Developer Hub — Auto Drive, Auto SDK, and developer adoption.

https://develop.autonomys.xyz

Documentation — DSN, farming, and network architecture.

https://docs.autonomys.xyz

Auto Drive — Gateway to permanent on-chain storage.

https://ai3.storage

Autonomys Network — 2025 End-of-Year Report

To our community, partners, and supporters,

2025 was the year Autonomys transitioned from foundational infrastructure to a fully activated, production-ready network. We entered the year with a live Proof-of-Archival-Storage (PoAS) mainnet and a growing distributed storage network (DSN). We close the year with Domains deployed, Auto EVM live, token transfers enabled, exchange markets established, and an expanding ecosystem of builders, partners, and agents integrating real workloads.

Across consensus, storage, execution, and token infrastructure, Autonomys now operates as a vertically integrated, AI-native Layer 1 designed to support permanent, verifiable data and modular on-chain agents at scale.

At the protocol level, the year was defined by the delivery and hardening of Mainnet Phase-2. At the execution layer, we activated Auto EVM to bring permissionless smart contracts online. At the economic layer, we enabled token transfers, launched the Guardians of Growth Staking Incentives Program, and established liquid markets across multiple centralized and decentralized venues.

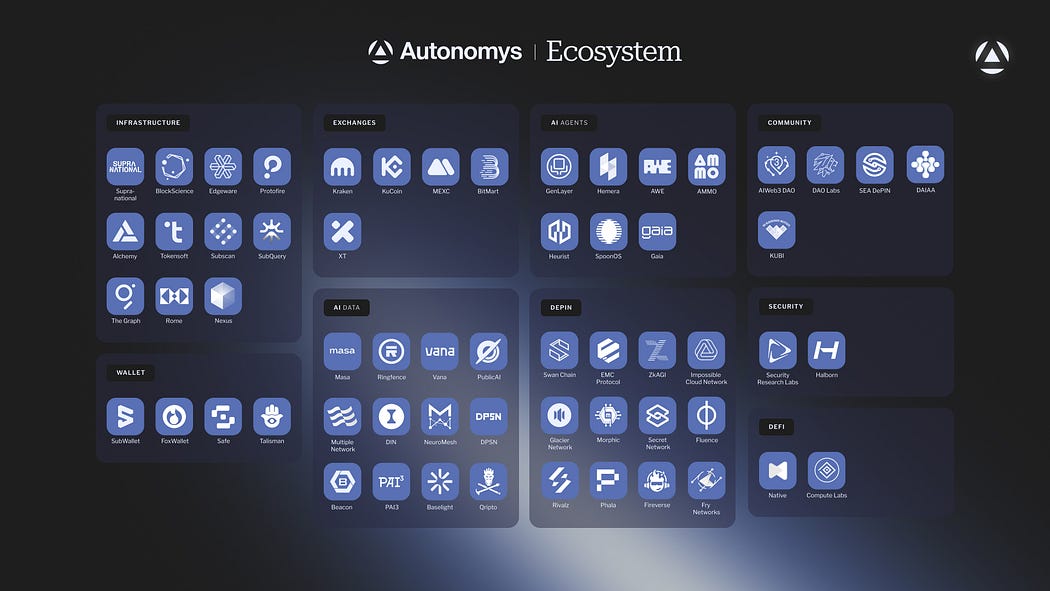

By December 2025, the Autonomys ecosystem encompassed 60 partners and integrations across infrastructure, exchanges, AI agents, AI data, DePIN, wallets, security, and DeFi. These collaborations reflect a deliberate focus on permanence, verifiability, and scalable AI-native execution rather than integration volume alone.

With consensus, execution, storage, and liquidity now successfully in place, our focus has sharpened toward long-term cryptographic resilience and permissionless scalability. Autonomys is preparing early for challenges and demands that may emerge years from now, ensuring the network remains durable, trustworthy, and capable of supporting the next generation of AI-native workloads.

This is our 2025 year in review, with a brief look at the priorities and developments on the horizon for 2026.

At the protocol level, 2025 was defined by the delivery and hardening of Mainnet Phase-2.

- In January, the consensus chain surpassed 1 million blocks, demonstrating stability, reliable block production, and sustained support from more than a thousand farmers contributing hundreds of petabytes of SSD capacity.

- Throughout Q1, engineering focused on Domain readiness, including extensive Cross-Domain Messaging (XDM) stress testing with roughly 400,000 transactions processed on the Taurus testnet. These tests surfaced specific edge cases that were resolved prior to launch.

- Execution performance improved significantly, with the Taurus EVM receiving optimizations that achieved more than 2,000 TPS in internal testing.

- DSN retrieval paths were strengthened to reduce latency for Auto Drive cache misses, improving the performance profile for AI, agent, and data-intensive workloads.

This preparation culminated mid-year in the formal activation of Mainnet Phase-2.

Token transfers were enabled at the protocol level on July 16, 2025, followed shortly by the launch of Auto EVM, introducing permissionless smart-contract deployment integrated with the DSN.

These steps completed the transformation from a foundational consensus chain into a full-stack execution environment where agents, applications, and data-rich workloads can operate on a single, coherent network.

Security remained a primary focus throughout 2025.

SRLabs concluded the 2025 audit cycle covering Domains, Cross-Domain Messaging (XDM), Proof-of-Time (PoT), Proof-of-Archival-Storage (PoAS), and fraud proofs, with all critical findings addressed before mainnet launch, and remaining optimizations tracked publicly as part of our continuous security commitment.

Combined with the performance and reliability work carried out during Q1, these efforts ensured that Mainnet Phase-2 went live on a robust foundation designed to withstand operational stress, adversarial behavior, and long-term usage patterns.

2025 also marked the year Autonomys began to fully realize its role as infrastructure for AI-native development.

Auto Agents Framework

Major upgrades to the Auto Agents Framework included:

- v0.2.0 with complex orchestration, multi-character setups, and an enhanced Memory Viewer

- Improved DSN memory persistence and resurrection

- Argu-Mint v2, a proof-of-concept (PoC) demonstrating verifiable on-chain agent memory and learned experiences

Later in the year, the release of AgentOS and the first Auto SDK MCP server further streamlined integration between agents, Auto Drive, and DSN-backed storage, making it easier for developers to connect agent frameworks to permanent, verifiable memory.

Auto Drive and DSN

The DSN matured substantially:

- Auto Drive was launched this year, giving developers a simple way to store and access data on the network. Shortly after launch, it added support for Amazon S3-style workflows, alongside a refreshed interface.

- DSN retrieval optimizations improved cache miss handling and response times, strengthening support for AI, analytics, and archival use cases.

- Research advanced the design for verifiable erasure-coded, on-demand data sharding, positioning Autonomys for orders-of-magnitude throughput improvements as demand scales.

- We also shipped enhancements requested by teams integrating our stack.

Developer Enablement

To match these infrastructure advances, Autonomys invested in developer enablement:

- The Autonomys Academy was refreshed as a product and architecture learning hub, while the Developer Hub was expanded to serve as the primary technical resource for builders, with deeper documentation, tooling references, and integration guides.

- The Autonomys Core content series clarified vision, farming, agent tooling, storage, and developer pathways.

Together, these initiatives made it easier for developers to understand the architecture, experiment with agents and storage, and build composable applications on top of the Auto Suite.

The economic layer of Autonomys evolved significantly across the year.

Token Transfer Enablement

Token transfers were activated at block 3,585,226, unlocking the network’s economic layer and enabling participating builders to move seamlessly into staking, rewards, and Domain-based applications.

Staking and Decentralization

To encourage a broad and resilient validator and nominator set, the Subspace Foundation launched the Guardians of Growth Boosted-Staking Initiative, allocating 5,000,000 AI3 over approximately twelve months to incentivize operator and nominator participation.


By September, roughly 28 million AI3, about 25 percent of the circulating supply, was staked on the network. Today, that figure has grown to over 34 million, representing approximately one-third of circulating supply.

Exchange Listings and Liquidity

All token listing and liquidity operations were conducted by the Subspace Foundation, preserving separation between protocol development and market operations.

In August, the Foundation coordinated:

- Listings on Kraken, KuCoin, MEXC, BitMart, and XT.com

- Deployment of the Hyperlane bridge on Auto EVM

- Launch of wAI3 with a USDC pool on Uniswap

- Wallet integrations via SubWallet and Talisman

- Deployment of Safe multisig on Auto EVM

These actions established a globally accessible token ecosystem while maintaining clear boundaries between governance, engineering, and market infrastructure.

2025 Ecosystem Snapshot

Autonomys’ ecosystem expanded substantially in 2025 as more teams adopted infrastructure designed for permanence, verifiability, and scalable AI-native execution. Many collaborations deepened earlier engagements, while others represented new integrations across AI data pipelines, agent frameworks, decentralized storage, and DSN-backed verifiable memory.

Autonomys began the year with 30 partners. By December 2025, the ecosystem reached 60 partners and integrations, doubling in size in a single year. Additional integrations are underway and will be announced in early 2026. As we head into the new year, the expanding ecosystem will continue to shape how builders use Autonomys for persistent data, agent execution, and decentralized AI development.

Autonomys’ developer and community base expanded meaningfully as the network matured.

- Hackathons such as HackSecret 5, the Aurora Buildathon, and the Midwest Block-a-thon introduced Autonomys tooling to hundreds of developers.

- The Game of Domains initiatives, including “Crossing the Narrow Sea” and “The Watcher’s Oath,” helped secure and optimize XDM and improve staking UX ahead of Phase-2 activation.

- Campaigns such as The Dawn of Autonomy, Spark & Seed, Node Atlas, and the Monthly Community Contests strengthened early contributor engagement and rewarded meaningful participation.

In July, the Subspace Foundation introduced the Ecosystem Grants Program to fund Infrastructure, AI-powered dApps and Agents, Integrations, Research, and Community growth. Designed as a long-term investment in ecosystem health, the program supports the foundational components, applications, and communities that enable the Autonomys Network to grow sustainably. Early applications began arriving immediately following token listings. These initiatives created a pipeline for new projects, improved documentation, and aligned community efforts with the network’s technical roadmap, helping ensure that Autonomys grows in a focused and durable way.

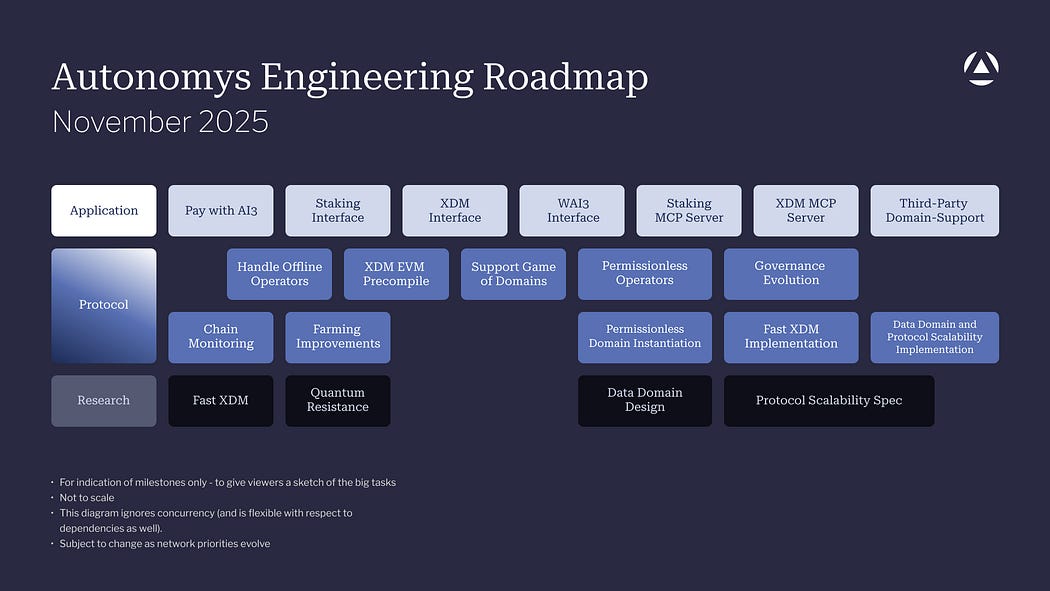

In November, the Autonomys Engineering Roadmap was released, outlining priorities across three tracks: Application, Protocol, and Research.

- Application Layer — the tools and interfaces that users and developers touch.

- Protocol Evolution — the code that shapes the reliability, performance, and decentralization of the network itself.

- Research — the forward-looking work that prepares Autonomys for emerging technical, economic, and cryptographic challenges.

These tracks guide the next stage of network evolution:

- Strengthening the application layer.

- Hardening the protocol for open participation.

- Researching the technologies that will define Autonomys five years from now.

- Preparing the network for permissionless operators and Domains with Game of Domains.

Our ongoing post-quantum cryptography (PQC) research continues in parallel with these roadmap tracks to ensure long-term cryptographic resilience as the network evolves.

2025 was the year the Autonomys Network transformed from a whitepaper into a fully functional network. In a space filled with hype and empty promises, that is no small accomplishment. This was only possible because our farmers, developers, and community members believed it was worth building and stuck around to see it through.

The hard part was never the technology; it was getting people to use it. With the technical foundation in place, what matters now is adoption. For 2026, we are focused entirely on building traction: bringing in projects that need what we built and showing how it solves real problems. We are putting our resources behind this through our grants program, which will fund infrastructure development, AI-powered applications, and ecosystem integrations. That also means targeted outreach to developers who require permanent, verifiable storage.

I’m thankful for all the hard work from our team, ambassadors, and everyone who has supported us over the years. Wishing you a happy holiday season, and a healthy and prosperous 2026.

— Todd Ruoff

CEO, Autonomys


Autonomys x metaProof: Permanent On-Chain Storage for metaKnyts and The Qriptopian QriptoMedia Codexes

Autonomys is pleased to announce a strategic partnership with metaProof to support permanent, decentralized storage for metaKnyts and The Qriptopian, metaProof’s QriptoMedia publishing initiative.

Through this integration, metaProof is leveraging Auto Drive, Autonomys’ gateway to on-chain permanent storage, to ensure that long-form media, codex artifacts, and evolving narrative layers remain verifiable, tamper-resistant, and accessible over time without reliance on off-chain pinning or custodial infrastructure.

This partnership reflects a shared belief that digital media, cultural artifacts, and knowledge systems benefit from infrastructure designed for longevity, not ephemerality.

Key Aspects of the Partnership

- Auto Drive Integration: metaProof is integrating Auto Drive as the storage layer behind its expanding codex-based media. Auto Drive provides content-addressed, on-chain storage backed by Autonomys’ Distributed Storage Network (DSN), giving metaProof a developer-friendly interface with permanence and verifiability built in by default. This allows complex media structures to be stored and retrieved with the same guarantees as the underlying network itself.

- Supporting QriptoMedia and iQubes: The integration supports metaProof’s Quantum Ready Internet Protocols (Qripto), including iQubes, which package content, data, tools, models, and agents into reusable and auditable primitives. By anchoring these components to permanent storage, metaProof ensures that codex elements such as story layers, artifacts, and interactive media remain intact, referenceable, and composable over time.

- Powering The Qriptopian and metaKnyts Codexes: Auto Drive underpins storage for both The Qriptopian, metaProof’s crypto-agentic magazine, and the metaKnyts Codexes, enabling living publications that can expand, remix, and evolve without sacrificing integrity or provenance. Readers can explore canon, characters, lore, assets, and releases within a unified, magazine-native experience, supported by storage designed for long-term accessibility.
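The idea of content-addressed storage behind these guarantees can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Auto Drive's API. It uses a plain hex SHA-256 digest as a stand-in for a real CID, which also encodes codec and hash-function prefixes. The `publish_layer` helper and its JSON record layout are hypothetical. Each new codex layer records the address of the layer it builds on, so any edit to earlier content changes that content's address and breaks the link.

```python
import hashlib
import json
from typing import Optional, Tuple


def content_address(data: bytes) -> str:
    """Illustrative content address: hex SHA-256 of the bytes.

    Real CIDs additionally carry multihash/codec prefixes; this is a simplification.
    """
    return hashlib.sha256(data).hexdigest()


def verify(data: bytes, address: str) -> bool:
    """Anyone holding the bytes can recheck them against the address they were fetched by."""
    return content_address(data) == address


def publish_layer(body: str, previous: Optional[str]) -> Tuple[bytes, str]:
    """Hypothetical helper: wrap a story layer in a record that links to its predecessor."""
    record = json.dumps({"body": body, "previous": previous}, sort_keys=True).encode("utf-8")
    return record, content_address(record)


# A base layer, then a remix that points back at it by address.
base, base_addr = publish_layer("Chapter 1", None)
remix, remix_addr = publish_layer("Chapter 1, remixed", base_addr)
print(verify(base, base_addr))                      # True
print(json.loads(remix)["previous"] == base_addr)   # True
```

The remix embeds the base layer's address. Provenance can therefore be checked by walking the `previous` links and re-hashing each record along the way.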

Highlighted Use Cases

metaKnyts

A crypto-comic universe spanning unreleased digital still comics and new motion comic episodes, evolving into a broader transmedia franchise supported by codex-driven discovery.

metaKnyts 21 Sats QriptoGraphic Novel

A 400+ page limited-edition collectible narrative intertwining the metaKnyts universe with the disappearance of Satoshi Nakamoto, enhanced by deeper story artifacts, unlockable experiences, and community participation rewards.

Launch Details

metaProof has confirmed that the metaKnyts Codex and The Qriptopian Codex will launch on December 21, 2025, initially as a private release to its community of approximately 3,500 metaKnyts Reg CF investors, alongside the release of the metaKnyts 21 Sats QriptoGraphic Novel.

The release introduces a storytelling model that pairs a core narrative with a live codex layer, allowing readers to both consume the story and interact with its expanding world in a single, continuous experience.

Autonomys is proud to support this release with infrastructure purpose-built for permanence, verifiability, and long-lived digital media.

About metaProof

metaProof builds Qripto protocols and products that merge knowledge, content, and storytelling with identity, value, and agentic systems — anchored by iQubes and QriptoMedia — to enable durable, composable digital experiences across entertainment and beyond.


About Autonomys

The Autonomys Network — the foundation layer for AI3.0 — is a hyper-scalable decentralized AI (deAI) infrastructure stack encompassing high-throughput permanent distributed storage, data availability and access, and modular execution. Our deAI ecosystem provides all the essential components to build and deploy secure super dApps (AI-powered dApps) and on-chain agents, equipping them with advanced AI capabilities for dynamic and autonomous functionality.

When Quantum Comes Knocking

What is the big deal?

Quantum computers cannot break blockchains today. The risk, however, already exists, because attackers do not need a fully capable quantum machine now to create serious problems later. All they need to do is collect blockchain data today and store it.

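On many networks, public keys become visible on-chain in one of two ways. Some address formats embed the public key itself, so the key is visible as soon as the address is used. Others publish only a hash of the key, as with Bitcoin's P2PKH or Ethereum addresses, and the key is revealed the first time the account signs a transaction or spends funds. Once quantum computers become powerful enough, attackers can use those stored public keys to derive the corresponding private keys for vulnerable schemes and then forge signatures or seize control of old addresses.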

This long-term strategy is often called “harvest now, decrypt later,” but “harvest now, derive later” is more accurate in the context of blockchains [1]. The harvesting happens today. The deriving happens years or decades from now. Because blockchain history is publicly replicated across many nodes and archival infrastructure preserves past states indefinitely, anything exposed now will remain visible once quantum computers reach the required capability. This is not a hypothetical concern reserved for the distant future. As a16z crypto observes:

“Post-quantum encryption demands immediate deployment despite its costs: Harvest-Now-Decrypt-Later (HNDL) attacks are already underway, as sensitive data encrypted today will remain valuable when quantum computers do arrive, even if that’s decades from now.” [6]
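The harvesting step itself needs no special capability, which is why it is cheap to start today. The Python sketch below models it with a hypothetical, simplified ledger. The `Record` type, the address strings, and the byte-string keys are illustrative assumptions rather than any real chain's data model. It gathers every public key that has appeared on-chain and flags the addresses that still hold funds, since those are the ones a future quantum attacker would target first.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Set


@dataclass
class Record:
    """Hypothetical, simplified view of one on-chain address."""
    address: str
    # Raw public key if it has appeared on chain; None if only a hash of it has
    # (for example, an address that has received funds but never spent them).
    public_key: Optional[bytes]


def harvest_exposed_keys(history: List[Record]) -> Dict[str, bytes]:
    """Collect every public key visible on chain today, keyed by address."""
    harvested: Dict[str, bytes] = {}
    for record in history:
        if record.public_key is not None:
            harvested.setdefault(record.address, record.public_key)
    return harvested


def still_at_risk(harvested: Dict[str, bytes], balances: Dict[str, int]) -> Set[str]:
    """Addresses whose keys are already public and that still control funds."""
    return {addr for addr in harvested if balances.get(addr, 0) > 0}


history = [
    Record("alice", b"pk-alice"),  # has spent before: key exposed
    Record("bob", None),           # never spent: only a key hash is public
    Record("carol", b"pk-carol"),  # key exposed, but the balance has moved on
]
balances = {"alice": 10, "bob": 5, "carol": 0}
print(still_at_risk(harvest_exposed_keys(history), balances))  # {'alice'}
```

Carol's key is just as exposed as Alice's, but nothing of value depends on it anymore. Moving funds and trust away from exposed keys is what removes the risk.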

Why the cryptography used by blockchains today will not survive the quantum era

Most blockchains depend on classical elliptic-curve signature schemes such as ECDSA and EdDSA, and much of the surrounding infrastructure also relies on RSA. These systems are believed to be secure today because no efficient classical algorithms are known for solving the underlying mathematical problems at the key sizes used in practice. For example:

- ECDSA and EdDSA rely on the hardness of the elliptic-curve discrete logarithm problem

- RSA relies on the difficulty of factoring large integers
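The asymmetry these schemes rely on can be shown with deliberately tiny numbers. The Python sketch below is illustrative only, and its toy parameters were chosen for the example. It uses the discrete logarithm in the multiplicative group modulo a small prime, which belongs to the same problem family as the elliptic-curve version behind ECDSA and EdDSA but is not identical to it. For factoring, it uses naive trial division. In each case the forward direction is a single fast `pow` or multiplication, while the naive inverse has to search, and its cost grows exponentially with the bit length of the numbers. Real classical attacks use much better algorithms than these, but at cryptographic sizes they still remain out of reach.

```python
from math import isqrt
from typing import Optional, Tuple


def brute_force_dlog(g: int, h: int, p: int) -> Optional[int]:
    """Find x with g**x % p == h by trying every exponent in turn."""
    value = 1
    for x in range(p - 1):
        if value == h:
            return x
        value = value * g % p
    return None


def trial_division(n: int) -> Optional[Tuple[int, int]]:
    """Return the smallest factor pair of n by testing divisors up to sqrt(n)."""
    for d in range(2, isqrt(n) + 1):
        if n % d == 0:
            return d, n // d
    return None


# Forward direction: one modular exponentiation, one multiplication.
p, g, secret = 23, 5, 6
public = pow(g, secret, p)             # 8
print(brute_force_dlog(g, public, p))  # 6
print(trial_division(61 * 53))         # (53, 61)
```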

Quantum computers operate differently. They use quantum states and interference to run certain algorithms much more efficiently than classical machines. The most important in this context is Shor’s algorithm, which provides a polynomial-time method for solving both factoring and discrete logarithm problems on a sufficiently large and stable quantum computer [2].

If such a machine becomes available, the implication is clear: for cryptosystems based on factoring and discrete logarithms, a quantum-capable adversary could compute private keys from public keys. Any public key that has ever appeared on chain becomes a permanent point of potential weakness, because it can be harvested and attacked later if assets or trust still depend on it.

By contrast, symmetric primitives such as block ciphers and hash functions are affected only moderately by known quantum attacks such as Grover’s algorithm [3], which offers at most a quadratic speedup for brute-force search. Doubling key or output sizes (for example, moving from 128-bit to 256-bit keys) typically restores comparable security. The critical break occurs at the public-key signature layer, which blockchains use for ownership and transaction authorization.
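The arithmetic behind doubling key sizes is short. Grover's algorithm finds one marked item among N candidates in roughly (π/4)·√N iterations. A brute-force search over an n-bit key therefore drops from about 2^n classical trials to about 2^(n/2) quantum iterations. The Python sketch below simply evaluates that textbook estimate. It ignores error-correction overhead and the fact that Grover's algorithm parallelizes poorly, both of which make real attacks costlier than the estimate suggests.

```python
import math


def grover_iterations_log2(key_bits: int) -> float:
    """log2 of the approximate optimal Grover iteration count, (pi/4) * sqrt(2**key_bits)."""
    return math.log2(math.pi / 4) + key_bits / 2


def effective_security_bits(key_bits: int) -> float:
    """Security level against Grover key search, ignoring constant factors: half the key length."""
    return key_bits / 2


for bits in (128, 256):
    print(bits, effective_security_bits(bits), round(grover_iterations_log2(bits), 2))
```

Under this estimate, a 128-bit key offers roughly 64-bit security against a quantum attacker, while a 256-bit key keeps roughly 128 bits. That gap is the reasoning behind the usual advice to double symmetric key sizes.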

A simple and accurate explanation of Shor’s algorithm

Shor’s algorithm matters because it changes the cost of breaking the mathematical problems that secure classical public-key cryptography.

For classical computers, the best known algorithms for factoring and discrete logarithms still require super-polynomial time at cryptographic sizes. That makes attacks infeasible in practice and is the main reason current public-key schemes are considered secure today.

A quantum computer can approach these problems differently. It encodes the problem into quantum states that represent many possibilities at once. Shor’s algorithm uses interference patterns among those states to extract the hidden periodic structure behind the problem. Once that structure is known, the remaining steps to factor a number or compute a discrete logarithm become efficient.
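The final, classical part of Shor's algorithm can be shown concretely for a tiny modulus. In the Python sketch below, the period r of a^x mod N is found by brute force. That loop stands in for the quantum period-finding step, which is the only part a quantum computer accelerates and which takes exponential time classically. Once r is known, the factors follow from gcd(a^(r/2) ± 1, N). The function names are illustrative, as is the retry convention: the function returns `None` when r is odd or a^(r/2) ≡ −1 mod N, and in that case a different a should be tried.

```python
from math import gcd
from typing import Optional, Tuple


def find_period(a: int, n: int) -> int:
    """Smallest r > 0 with a**r % n == 1 (requires gcd(a, n) == 1).

    Brute force: this is the step Shor's algorithm replaces with quantum period finding.
    """
    r, value = 1, a % n
    while value != 1:
        value = value * a % n
        r += 1
    return r


def factor_with_period(n: int, a: int) -> Optional[Tuple[int, int]]:
    """Split a semiprime n using the period of a mod n; None means 'retry with another a'."""
    shared = gcd(a, n)
    if shared == n:
        return None  # a is a multiple of n: pick a different a
    if shared != 1:
        # Lucky guess: a already shares a factor with n.
        return min(shared, n // shared), max(shared, n // shared)
    r = find_period(a, n)
    if r % 2 == 1:
        return None  # odd period: no usable square root
    y = pow(a, r // 2, n)
    if y == n - 1:
        return None  # a**(r/2) == -1 mod n gives only trivial factors
    p, q = gcd(y - 1, n), gcd(y + 1, n)
    return min(p, q), max(p, q)


print(factor_with_period(15, 7))  # (3, 5): period 4, 7**2 % 15 == 4
print(factor_with_period(21, 2))  # (3, 7): period 6, 2**3 % 21 == 8
```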

In practical terms, a sufficiently powerful quantum computer running Shor’s algorithm could recover private keys from public keys for vulnerable signature schemes. The limitation today is not the algorithm, but the absence of quantum hardware with the scale and stability required to apply it to real-world key sizes.

What “harvest now, derive later” looks like in practice

A useful way to understand this threat is through an analogy that reflects how public-key cryptography works.

Imagine an attacker making a complete copy of an encrypted hard drive. Today, the encryption is strong, and the attacker cannot break it. The copy appears harmless.

But the attacker stores it anyway.

Years later, new technology becomes available that can break that encryption quickly. The attacker no longer needs the original device. The old copy becomes enough to unlock everything that was stored inside.

This mirrors the quantum threat to blockchain. From an attacker’s perspective, public keys and signatures recorded on-chain play a similar role to strongly encrypted data: they are safe today only because inverting the underlying math is computationally infeasible. An adversary can save this data now and wait until a quantum computer can break the assumptions behind ECDSA, EdDSA, or RSA.

At that point, previously collected public keys can be turned into the private keys needed to forge signatures or control old addresses. If valuable assets or trust relationships are still tied to those keys, the damage can be immediate and irreversible.

Why blockchains must prepare before quantum computers mature

Preparing for quantum risk is not about panic, but about timing. As a16z crypto puts it:

“The real challenge in navigating a successful migration to post-quantum cryptography is matching urgency to actual threats.” [6]

Upgrading a decentralized network is intentionally slow. Users need time to migrate keys. Protocols must be redesigned. New signature schemes must be vetted for both security and real-world performance. None of this can occur instantly at the moment quantum hardware becomes capable.

If a public key has already appeared on-chain, that exposure cannot be erased from history. Waiting until quantum computers reach practical capability is too late because the vulnerability arises the moment public keys and signatures are recorded under quantum-vulnerable schemes, not when the first large-scale quantum machine goes online.

A responsible blockchain begins preparing early by:

- Integrating quantum-resistant signature schemes, such as the lattice-based ML-DSA (FIPS 204) and hash-based SLH-DSA (FIPS 205) standards that NIST published in 2024.

  NIST’s 2024 draft guidance (NIST IR 8547) outlines a formal migration roadmap, identifies which classical algorithms are quantum-vulnerable, and specifies approved post-quantum replacements [4]. The draft sets a final deadline of 2035 for fully disallowing vulnerable algorithms across federal systems. External analyses, including the Global Risk Institute’s Quantum Threat Timeline Report, warn that the threat could materialize earlier than expected and that progress may be hidden from full view. This further supports accelerating migration timelines for systems with long-lived data [5].

- Reducing unnecessary public-key exposure in protocol design, for example, by using key-hiding address formats

- Designing protocols with cryptographic agility, so that primitives can be replaced or upgraded in response to evolving standards and threats

- Treating quantum readiness as an ongoing requirement rather than a one-time upgrade
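Reducing public-key exposure and designing for cryptographic agility can be combined in one small design. The Python sketch below shows a hypothetical address format, not Autonomys' or any other network's actual scheme. The address is a one-byte algorithm identifier followed by a BLAKE2b hash of the public key. The hash keeps the raw key off-chain until its owner first spends. The algorithm byte gives the protocol room to add a post-quantum signature scheme later without changing the address layout. The identifier values and constant names are illustrative assumptions.

```python
import hashlib

# Hypothetical algorithm identifiers, for illustration only.
ALG_ED25519 = 0x01
ALG_ML_DSA = 0x02  # placeholder slot for a post-quantum scheme such as ML-DSA


def make_address(alg_id: int, public_key: bytes) -> bytes:
    """Address = algorithm tag || BLAKE2b-256(public key); the key itself is not published."""
    digest = hashlib.blake2b(public_key, digest_size=32).digest()
    return bytes([alg_id]) + digest


def verify_reveal(address: bytes, alg_id: int, public_key: bytes) -> bool:
    """At spend time the owner reveals the key; nodes check it matches the committed hash."""
    return address == make_address(alg_id, public_key)


classic_key = bytes(32)    # stand-in key bytes, not a real keypair
pq_key = bytes(range(64))  # stand-in for a (much larger) post-quantum public key
addr = make_address(ALG_ED25519, classic_key)
print(len(addr), verify_reveal(addr, ALG_ED25519, classic_key))  # 33 True
print(verify_reveal(addr, ALG_ML_DSA, classic_key))              # False
print(len(make_address(ALG_ML_DSA, pq_key)))                     # 33
```

Hiding the key this way only shortens the exposure window. Once an address has been spent from, its key is public and the address should not be reused. The technique complements migration to quantum-resistant signatures rather than replacing it.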

These measures help ensure that by the time quantum computers operate at relevant scales, at whatever point that arrives, there is little of value left tied to keys that can be broken by Shor’s algorithm.

The future rewards early action

Quantum hardware continues to progress. It is not strong enough today to break classical signature schemes at realistic key sizes, but the theoretical tools already exist, and many experts consider large-scale quantum machines a matter of “when,” not “if.”

Because blockchain data is public and long-lived, the decisions made today determine whether that data remains trustworthy in a quantum future.

Long-term data requires long-term cryptography. Networks that begin preparing now, by reducing exposure to quantum-vulnerable keys and adopting quantum-resistant primitives, will be far better positioned to remain secure, reliable, and resilient once quantum computing matures.

Autonomys is committed to this path, continuing to harden its cryptographic foundations and evolve toward quantum-resistant security as part of its long-term network design.

Resources

[1] Subspace Network, “Harvest Now, Derive Later: The Most Underestimated Threat in Blockchain,” 2024.

Available at: https://medium.com/subspace-network/harvest-now-derive-later-the-most-underestimated-threat-in-blockchain-ccad4166e973

[2] P. Shor, “Algorithms for Quantum Computation: Discrete Logarithms and Factoring,” Proc. 35th Annual Symposium on Foundations of Computer Science (FOCS), 1994.

Extended version available at: https://arxiv.org/abs/quant-ph/9508027

[3] L. K. Grover, “A Fast Quantum Mechanical Algorithm for Database Search,” 1996.

Available at: https://arxiv.org/abs/quant-ph/9605043

[4] National Institute of Standards and Technology, “NIST IR 8547: Transition to Post-Quantum Cryptography Standards,” Draft, 2024.

Available at: https://csrc.nist.gov/pubs/ir/8547/ipd

[5] Global Risk Institute, “Quantum Threat Timeline Report 2023,” 2023.

Available at: https://www.globalriskinstitute.org/publications/quantum-threat-timeline-report-2023/

[6] a16z Crypto, “Quantum computing and blockchains: Matching urgency to actual threats,” 2025.

Available at: https://a16zcrypto.com/posts/article/quantum-computing-misconceptions-realities-blockchains-planning-migrations/